My AI Code Review Journey: Copilot, CodeRabbit, Macroscope

As a developer, I’m always curious about tools that can make my workflow better. It’s part of the fun of the job, right? As a GitHub Star, I’m in a position to see a lot of new technology, so when AI-powered code review tools started gaining traction, I was naturally intrigued.

My journey wasn’t driven by a specific problem, but by sheer opportunity: CodeRabbit began sponsoring my work, the team from Macroscope reached out directly, and my work with GitHub meant I had early access to the latest GitHub Copilot features.

This gave me a unique chance to compare these tools in a real-world setting. I want to share my personal experience: the good, the bad, and the surprisingly funny side of letting an AI join my code review process.

First stop: GitHub Copilot review and the case of the mismatched ID

I started my experiment with the code review features built right into GitHub Copilot. My first impression? It felt like having a second pair of eyes with superpowers. It caught everything from minor stylistic nits (like a double /** in a comment) to far more critical issues around fail-safe behavior and security.

A perfect example came up almost immediately while I was rewriting a backend service. In the process, I had refactored how user identification worked, moving from a session-based ID to a more direct attendeeId. I was sure I had updated everything, but the AI caught something I’d completely missed.

The report it left on the pull request practically wrote the bug report for me. The problematic line of code was subtle:

```js
// The 'attendeeId' here was a public-facing ID, not the internal database ID.
const attendee = await AttendeeService.updateAttendee(eventId, attendeeId, { name, email, company });
```

The AI correctly identified that my service expected an internal key: a unique identifier used by the database, like a primary key. I was mistakenly passing it the public-facing attendeeId. This is exactly the kind of subtle but critical bug that can become a real headache to debug down the line.

The solution involved a change in both the code and the database. To ensure the key was always unique for a given attendee at a specific event, I changed the logic to use a composite key. This is a common database pattern where you combine two or more columns to create a single, unique identifier. For me, that meant concatenating the eventId and the attendeeId. The AI’s insight here didn’t just fix a bug; it pushed me toward a more robust data model.
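Here is a minimal sketch of that composite-key idea. It assumes string IDs and a ":" separator that never appears in either ID. buildAttendeeKey is a hypothetical helper name, not the author's actual code. The separator matters because plain concatenation would map eventId "ab" with attendeeId "c" and eventId "a" with attendeeId "bc" to the same key, "abc".

```js
// Hypothetical helper illustrating a concatenated composite key.
// Assumption: neither ID ever contains the separator character.
const KEY_SEPARATOR = ":";

function buildAttendeeKey(eventId, attendeeId) {
  const event = String(eventId ?? "");
  const attendee = String(attendeeId ?? "");
  if (event === "" || attendee === "") {
    throw new Error("eventId and attendeeId are both required");
  }
  if (event.includes(KEY_SEPARATOR) || attendee.includes(KEY_SEPARATOR)) {
    throw new Error(`IDs must not contain "${KEY_SEPARATOR}"`);
  }
  // The separator keeps ("ab", "c") and ("a", "bc") from colliding as "abc".
  return `${event}${KEY_SEPARATOR}${attendee}`;
}

// Usage: the same public attendeeId at two events yields two distinct keys.
const attendeesByKey = new Map();
attendeesByKey.set(buildAttendeeKey("event-1", "A123"), { name: "Ada" });
attendeesByKey.set(buildAttendeeKey("event-2", "A123"), { name: "Grace" });
console.log(attendeesByKey.size); // 2
```

Rejecting IDs that contain the separator is a defensive choice for this sketch. If your IDs are guaranteed to be GUIDs or numbers, that check may be unnecessary.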

Next up: CodeRabbit’s in-depth analysis

After getting comfortable with GitHub Copilot's reviews, I gave CodeRabbit a try. I must admit, the most immediate difference was the depth of the reviews. CodeRabbit often provided more suggestions, but what really stood out was how it presented them: the exact lines to change, the updated code, clear reasoning, and even a ready-made prompt to feed to another AI if I wanted it to implement the change for me.

A great example was when it found a classic null-check issue. It reported:

If AttendeeService.getAttendeeByAttendeeId returns null/undefined for an unknown attendeeIdCode, the subsequent access to attendee.id, attendee.name, etc. will throw and be caught as a 500. That’s both a runtime risk and the wrong status code for “not found”.

CodeRabbit provided this exact diff, which made the fix incredibly simple:

```diff
-const attendee = await AttendeeService.getAttendeeByAttendeeId(eventId, attendeeIdCode);
-const activities = await AttendeeService.getAttendeeActivitiesDetailed(eventId, attendeeIdCode);
+const attendee = await AttendeeService.getAttendeeByAttendeeId(eventId, attendeeIdCode);
+
+if (!attendee) {
+  return {
+    status: 404,
+    body: JSON.stringify({ error: "Attendee not found" }),
+  };
+}
+
+const activities = await AttendeeService.getAttendeeActivitiesDetailed(eventId, attendeeIdCode);
```

But it didn’t stop there. It also added a prompt that you can pass to another AI tool to make the change for you:

In … around lines 55 to 78, add a null-check after calling AttendeeService.getAttendeeByAttendeeId and if it returns null/undefined return a 404 response (with JSON error body) instead of continuing and causing a runtime error; only access attendee.id/name/email/company after the check, and verify whether AttendeeService.getAttendeeActivitiesDetailed expects attendeeIdCode or the internal attendee.id — if it expects the internal id, call it with attendee.id after the null-check.

This is what elevates a tool from a simple linter to a genuine partner. Sometimes it even anticipates the next potential bug, like the open question in that prompt about which ID getAttendeeActivitiesDetailed actually expects.
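To see the null-check in context, here is a self-contained sketch of a handler that applies the suggested guard. AttendeeService is a hypothetical in-memory stub, not the project's real service. The sketch also assumes the activities lookup expects the internal attendee.id, which is one of the two possibilities the prompt asks you to verify.

```js
// Minimal sketch, not the project's real code. AttendeeService below is a
// hypothetical in-memory stub keyed by "eventId:attendeeIdCode".
const AttendeeService = {
  async getAttendeeByAttendeeId(eventId, attendeeIdCode) {
    const attendees = {
      "event-1:A123": { id: 42, name: "Ada", email: "ada@example.com", company: "Acme" },
    };
    // Unknown IDs resolve to null, which is the case CodeRabbit flagged.
    return attendees[`${eventId}:${attendeeIdCode}`] ?? null;
  },
  async getAttendeeActivitiesDetailed(eventId, attendeeId) {
    // Stub: assumes the service expects the internal attendee.id.
    return attendeeId === 42 ? [{ title: "Keynote" }] : [];
  },
};

async function getAttendeeDetails(eventId, attendeeIdCode) {
  const attendee = await AttendeeService.getAttendeeByAttendeeId(eventId, attendeeIdCode);

  // Guard first: an unknown ID is a "not found", not a 500.
  if (!attendee) {
    return {
      status: 404,
      body: JSON.stringify({ error: "Attendee not found" }),
    };
  }

  // Safe to read attendee fields from here on. The internal id is passed on,
  // following the assumption above about what the activities lookup expects.
  const activities = await AttendeeService.getAttendeeActivitiesDetailed(eventId, attendee.id);

  return {
    status: 200,
    body: JSON.stringify({
      id: attendee.id,
      name: attendee.name,
      email: attendee.email,
      company: attendee.company,
      activities,
    }),
  };
}

// Usage: an unknown ID now yields a 404 instead of a TypeError.
getAttendeeDetails("event-1", "Z999").then((res) => console.log(res.status)); // 404
```

Whether the activities call should receive attendee.id or attendeeIdCode depends on the real service's contract. The stub only encodes one assumption, so check the actual signature before copying this pattern.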

It’s great to have a partner that flags potential issues, but the AI’s suggestions always require a human review. I verify recommended changes against business logic, edge cases, security considerations and test expectations before accepting them. AI can propose diffs and point out problems, but it can also misunderstand intent or make unsafe assumptions about APIs or data. I treat its output as a well-informed proposal, a huge time-saver, not an automatic commit.

A different perspective: Macroscope for the big picture

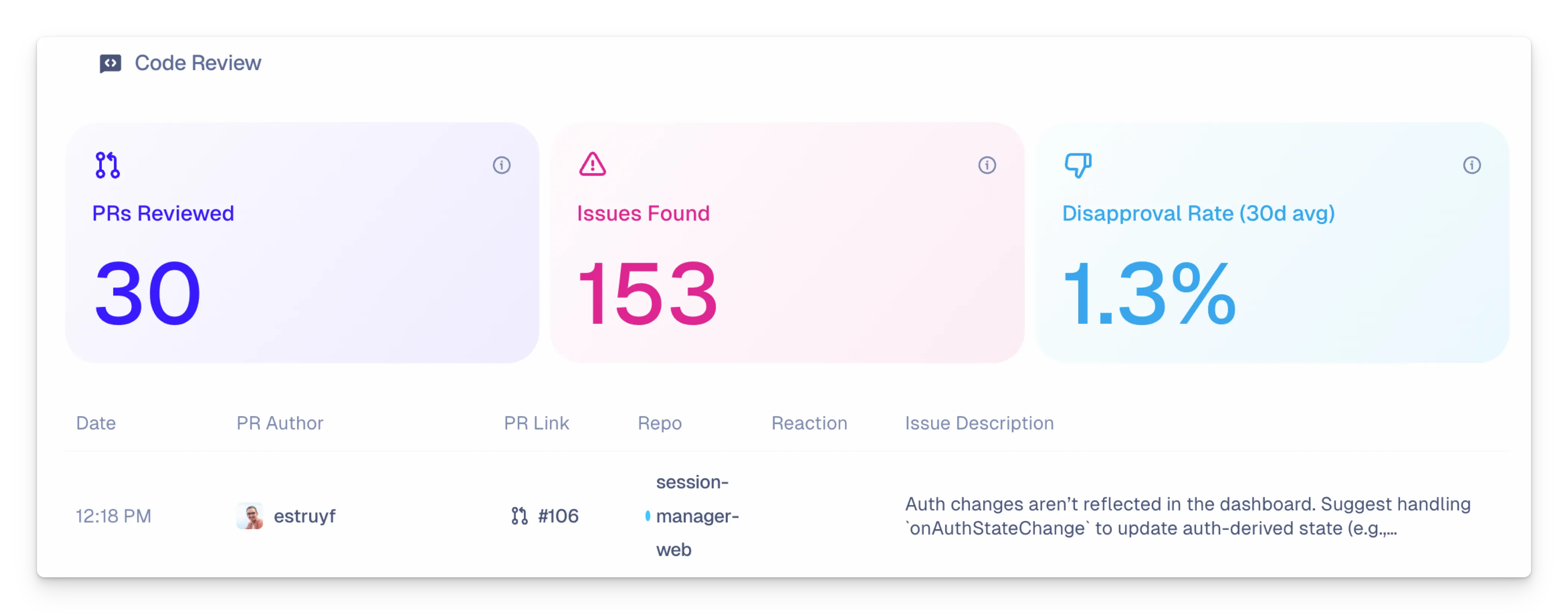

The third tool I tried, Macroscope, offered a different angle. While its code review suggestions were similar to the others, its main strength was its project management dashboard.

For me as a developer, this was interesting because it provides a bridge to the less technical members of the team. The dashboard gives product and project managers a high-level overview of a project’s pulse: code commits, review velocity, open issues, and more. With integrations for tools like Jira and Slack, it becomes a valuable hub for team-wide coordination.

The quirks and realities of AI review

Using these tools isn’t always a straightforward path. One of the funniest things I discovered is that on every change, the AI keeps finding new things to comment on. You can end up in a sort of “endless loop” of refinement if you’re not careful.

In one instance, I followed an AI’s suggestion to add extra validation logic. After a couple more iterations, the AI came back, reviewed its own suggestion, and concluded that the code was now “too difficult to understand”. The fix it proposed? Basically the code I had written in the first place!

My final verdict and advice

So, what’s my biggest takeaway after this journey?

1. AI is a fantastic safety net. I had another bug where I wrote a utility function to filter arrays but forgot to use the newly filtered arrays in my return statement. All three tools caught this subtle logic error, which I might otherwise have missed until testing. They excel at this kind of static analysis.

2. The AI actually learns. These tools aren’t static; they adapt. For instance, an AI suggested I use context.log.error() for logging in my Azure Functions. That was correct for the v3 Node.js programming model, but I use v4, where the equivalent is context.error(). I provided that feedback in the review, and I never saw that incorrect suggestion pop up again.

3. The human is irreplaceable. This is the big one. Even after the AI review is done, a human review is still essential. The AI is great at catching code-level issues, but it doesn’t understand your business goals, your team’s coding standards, or the long-term vision for a feature. Don’t blindly accept every suggestion. Treat the AI as a helpful junior or mid-level developer who still needs your senior guidance.

4. It’s an amazing documentation time-saver. An unexpected bonus was the AI-generated pull request summaries. CodeRabbit, for example, provided a detailed summary for one PR that was better than what I would have quickly written myself. This gives approvers instant context and frees up developer time—a win-win.
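The array-filtering slip from point 1 is easy to reproduce. The sketch below is a hypothetical reconstruction of that class of bug, not the author's actual utility. The function and field names are invented for illustration.

```js
// Buggy version: the filtered arrays are computed but never returned.
function removeInactiveBuggy(sessions, speakers) {
  const activeSessions = sessions.filter((s) => s.active);
  const activeSpeakers = speakers.filter((s) => s.active);
  return { sessions, speakers }; // bug: returns the original, unfiltered inputs
}

// Fixed version: return the arrays that were actually filtered.
function removeInactive(sessions, speakers) {
  const activeSessions = sessions.filter((s) => s.active);
  const activeSpeakers = speakers.filter((s) => s.active);
  return { sessions: activeSessions, speakers: activeSpeakers };
}

const sessions = [
  { id: 1, active: true },
  { id: 2, active: false },
];
const speakers = [{ id: "a", active: false }];

console.log(removeInactiveBuggy(sessions, speakers).sessions.length); // 2
console.log(removeInactive(sessions, speakers).sessions.length); // 1
```

In the buggy version, activeSessions and activeSpeakers are assigned but never read. A linter rule such as ESLint's no-unused-vars would also point at this pattern. That can be a cheap first line of defense alongside an AI reviewer.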

In the end, I believe AI code reviews are a valuable next step in software development. They catch bugs faster, they learn from your input, and they free up human developers to focus on the bigger picture. My advice? Dive in, but do it with an open and critical mind. Treat the AI as a new, incredibly sharp-eyed junior developer on your team—one that can help you a lot, as long as you provide the senior guidance.
